
I am a Ph.D. student at the Institute of Information Engineering, Chinese Academy of Sciences, in Beijing, advised by Prof. Zheng Lin. Previously, from 2021 to 2022, I was a research intern at the Pattern Recognition Center (PRC), WeChat AI, Tencent, supervised by Fandong Meng. My research interests include dialogue generation, large language models, and related applications. Please reach out to me via email: yangchenxu@iie.ac.cn.


Google Scholar / Semantic Scholar / DBLP (*: Equal contribution)


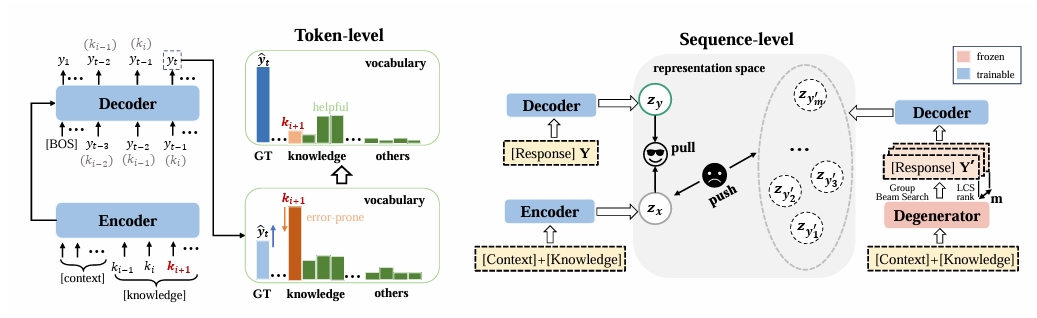

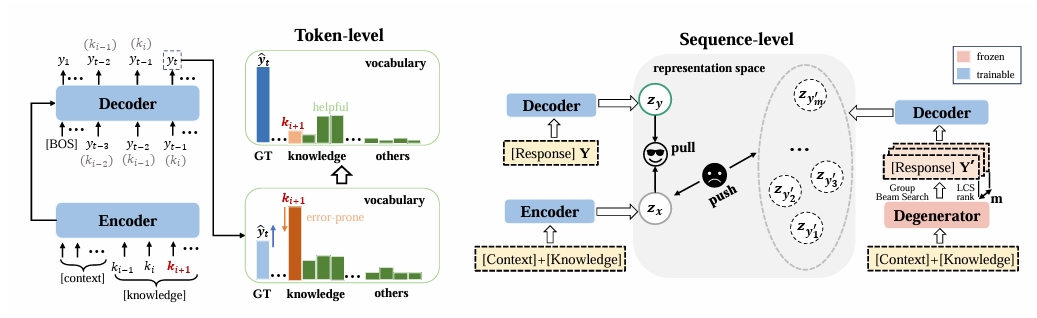

Chenxu Yang, Zheng Lin, Lanrui Wang, Chong Tian, Liang Pang, Jiangnan Li, Qirong Ho, Yanan Cao, Weiping Wang

EMNLP, 2023 (Main Conference)

pdf / code

We find that copying-style degeneration in knowledge-grounded dialogue generation is primarily due to the weak likelihood objective, which allows the model to "cheat" by merely duplicating knowledge snippets through superficial, overlap-based pattern matching. To overcome this challenge, we propose a Multi-level Adaptive Contrastive Learning (MACL) framework that dynamically samples negative examples and penalizes degeneration behaviors at both the token level and the sequence level. Extensive experiments on the Wizard of Wikipedia (WoW) dataset demonstrate the effectiveness of our approach across various pre-trained models and decoding strategies.


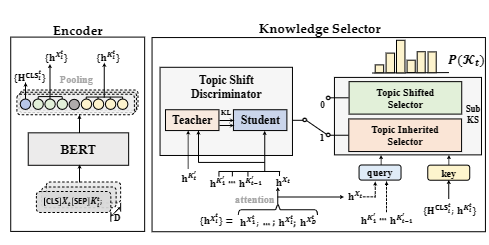

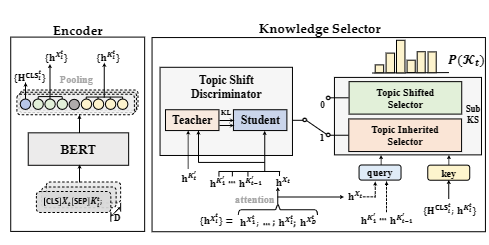

Chenxu Yang, Zheng Lin, Jiangnan Li, Fandong Meng, Weiping Wang, Lanrui Wang, Jie Zhou

COLING, 2022 (Main Conference)

pdf / code

For the knowledge-grounded dialogue generation (KGDG) task, we find that topic shift triggers knowledge alteration, and we propose a Topic-shift Aware Knowledge sElector (TAKE) to better locate the relevant parts of the dialogue history at an opportune moment. Experimental results on the WoW dataset show that, compared with strong baselines, TAKE not only selects knowledge more accurately, especially on the unseen test set, but also generates more informative responses under both automatic and human evaluation.


Design and source code from Jon Barron's website